What Is Multi-Cloud Kubernetes?

Multi-cloud Kubernetes involves deploying Kubernetes clusters across multiple cloud service providers. This setup enables organizations to leverage the strengths of each cloud provider and distribute workloads according to specific needs. By using multi-cloud strategies, businesses aim to avoid vendor lock-in, enhance availability, and improve performance by choosing optimal locations for workloads.

Multi-cloud Kubernetes lets teams scale operations across providers and regions without being limited to a single provider's regions, capacity, and services. Teams can run each workload where it performs best, using the specific resources or compliance benefits of each cloud provider while maintaining a consistent Kubernetes environment.

Why Deploy a Kubernetes Cluster Across Multiple Clouds?

Reducing Geographic Latency and Related Performance Constraints

Reducing geographic latency is a common reason for adopting a multi-cloud strategy. Organizations can deploy Kubernetes clusters closer to end users in different regions, shortening the distance data travels between the user and the data center. This improves application responsiveness, which is crucial for real-time applications.
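
As a minimal sketch of latency-aware placement, the snippet below picks the cluster with the lowest measured round-trip time for each user region. The cluster names, regions, and latency figures are hypothetical. In practice, this decision is usually made by a global load balancer or latency-based DNS rather than by application code.

```python
from typing import Dict

# Hypothetical round-trip times (ms) measured from each user region to each cluster.
LATENCY_MS: Dict[str, Dict[str, float]] = {
    "eu-west": {"aws-frankfurt": 18.0, "gcp-iowa": 110.0, "azure-singapore": 170.0},
    "us-central": {"aws-frankfurt": 105.0, "gcp-iowa": 12.0, "azure-singapore": 190.0},
    "ap-southeast": {"aws-frankfurt": 160.0, "gcp-iowa": 175.0, "azure-singapore": 9.0},
}


def nearest_cluster(user_region: str, latency_ms: Dict[str, Dict[str, float]]) -> str:
    """Return the cluster with the lowest measured latency for a user region."""
    candidates = latency_ms[user_region]
    return min(candidates, key=candidates.get)


for region in LATENCY_MS:
    print(region, "->", nearest_cluster(region, LATENCY_MS))
```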

Disaster Recovery Requirements

Multi-cloud Kubernetes helps meet disaster recovery requirements by letting businesses distribute workloads across providers and geographic regions. If one cloud provider experiences an outage, workloads can continue running on another. This redundancy minimizes downtime and, combined with data replication, reduces the risk of data loss.
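
The failover decision itself can be sketched as "use the first healthy cluster in priority order". This is a simplified illustration under assumed inputs: the cluster names are hypothetical, health would normally come from probes or a global load balancer, and data replication between clusters is not shown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    name: str
    provider: str
    healthy: bool


def pick_active(clusters: List[Cluster]) -> Optional[Cluster]:
    """Return the first healthy cluster in priority order, or None if all are down."""
    for cluster in clusters:
        if cluster.healthy:
            return cluster
    return None


# Priority order: primary first, then standbys on other providers (hypothetical names).
fleet = [
    Cluster("prod-primary", "aws", healthy=False),  # simulate a provider outage
    Cluster("prod-standby", "gcp", healthy=True),
    Cluster("prod-dr", "azure", healthy=True),
]
active = pick_active(fleet)
print(active.name if active else "no healthy cluster")
```

Placing standbys on a different provider than the primary is what lets this survive a provider-wide outage rather than only a single-cluster failure.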

Regulatory or Compliance Factors

Regulatory compliance is another reason to deploy Kubernetes clusters across multiple cloud environments. Different regions have specific data governance laws, and a multi-cloud setup allows businesses to store and process data in compliance with these regulations. This approach supports data residency and privacy, avoiding penalties for non-compliance.
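
One way to make residency rules enforceable is to encode them as data and check every placement against them. The sketch below assumes a hypothetical mapping of data classes to permitted jurisdictions and of clusters to the jurisdiction they run in; real rules depend on the regulations that apply to your business.

```python
from typing import Dict, List

# Hypothetical policy: which jurisdictions each data class may be stored in.
RESIDENCY_RULES: Dict[str, List[str]] = {
    "eu-personal-data": ["EU"],
    "public-content": ["EU", "US", "APAC"],
}

# Hypothetical clusters and the jurisdiction each one runs in.
CLUSTER_JURISDICTION: Dict[str, str] = {
    "aws-frankfurt": "EU",
    "gcp-iowa": "US",
    "azure-singapore": "APAC",
}


def allowed_clusters(data_class: str) -> List[str]:
    """List clusters whose jurisdiction is permitted for the given data class."""
    permitted = set(RESIDENCY_RULES[data_class])
    return [c for c, j in CLUSTER_JURISDICTION.items() if j in permitted]


def check_placement(data_class: str, cluster: str) -> None:
    """Raise if placing this data class on this cluster would violate residency rules."""
    if cluster not in allowed_clusters(data_class):
        raise ValueError(f"{data_class} may not be stored on {cluster}")


print(allowed_clusters("eu-personal-data"))  # ['aws-frankfurt']
check_placement("public-content", "gcp-iowa")  # passes silently
```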

Multi-Cloud Kubernetes vs. Multi-Cluster Kubernetes

Multi-cloud Kubernetes involves managing Kubernetes clusters across different cloud providers, maximizing each provider’s unique benefits. It focuses on avoiding vendor lock-in and accommodating diverse compliance demands by spreading workloads strategically across multiple platforms.

Multi-cluster Kubernetes refers to running more than one cluster, commonly within a single cloud provider. This strategy helps manage workloads in separate environments, with isolated scaling and configuration tailored to each workload. Running multiple clusters across multiple clouds combines both approaches, but it increases complexity and often requires dedicated management tools.

The following tips can help when operating Kubernetes across multiple clouds:

- Use proactive autoscaling strategies: Implement proactive autoscaling based on historical traffic patterns and cloud-specific pricing. This helps allocate enough resources across clouds for peak times without over-provisioning, and helps avoid cloud-specific price spikes.

- Use policy-as-code for governance: Enforce security and compliance policies across clouds using policy-as-code tools like Open Policy Agent (OPA). This standardizes rules and supports consistent governance across different environments, reducing security risks and operational inconsistencies.

- Benchmark cloud providers for specific workloads: Not all clouds perform equally for all workloads. Continuously benchmark the performance of each provider for specific use cases, and dynamically shift workloads to the provider that offers the best price/performance ratio for the current demand.

- Implement multi-cloud cost anomaly detection: Beyond simple cost tracking, use AI-driven anomaly detection tools to identify unusual cost spikes early. This helps you address misconfigured resources or inefficient deployments before they result in significant cost overruns.

- Maximize cloud discounts and incentives: Each cloud provider offers unique credits and discounts for specific services. Regularly review and optimize usage to ensure you’re maximizing cloud-specific incentives, which can help significantly reduce overall cloud costs.
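
The anomaly-detection tip mentions AI-driven tools. As a much simpler statistical stand-in (not an AI model), the sketch below flags days whose spend deviates from a trailing window by more than a set number of standard deviations. The window size, threshold, and cost figures are hypothetical.

```python
from statistics import mean, stdev
from typing import List


def cost_anomalies(daily_costs: List[float], window: int = 7, threshold: float = 3.0) -> List[int]:
    """Return indices of days whose cost deviates from the trailing window
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as anomalous.
            if daily_costs[i] != mu:
                anomalies.append(i)
        elif abs(daily_costs[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily spend (USD) for one cluster; day 9 has a misconfigured node pool.
costs = [410, 395, 402, 420, 398, 405, 415, 400, 408, 980, 412]
print(cost_anomalies(costs))  # [9]
```

Note that a spike stays in the trailing window for the following days and inflates the baseline; production tools typically handle this more carefully.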

Challenges of Managing Multi-Cloud Kubernetes

Here are some of the challenges organizations face when managing multi-cloud Kubernetes.

Cluster Sprawl Across Environments

As organizations grow, new clusters are often created in a multi-cloud Kubernetes environment to handle specific applications or projects. Without strict governance, this can produce a tangle of clusters that are hard to manage and secure. This phenomenon, known as cluster sprawl, increases operational complexity and wastes resources.

To counteract this, businesses should implement cluster lifecycle management practices. Automating cluster provisioning and de-provisioning reduces waste by ensuring clusters exist only while they are needed. Consistently tagged and documented clusters are easier to audit and control.
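
A lifecycle audit can be as simple as checking every cluster against a tagging policy. The sketch below assumes a hypothetical policy in which each cluster must carry `owner`, `cost-center`, and `expires` tags; the cluster inventory is also hypothetical and would normally come from each provider's API or your IaC state.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List

# Tags every cluster is expected to carry (a hypothetical governance policy).
REQUIRED_TAGS = {"owner", "cost-center", "expires"}


@dataclass
class ClusterRecord:
    name: str
    provider: str
    tags: Dict[str, str] = field(default_factory=dict)


def audit(clusters: List[ClusterRecord], today: date) -> Dict[str, List[str]]:
    """Flag clusters that are missing required tags or are past their expiry date."""
    findings: Dict[str, List[str]] = {}
    for c in clusters:
        issues = [f"missing tag '{t}'" for t in sorted(REQUIRED_TAGS - set(c.tags))]
        expires = c.tags.get("expires")
        if expires and date.fromisoformat(expires) < today:
            issues.append(f"expired on {expires}; candidate for de-provisioning")
        if issues:
            findings[c.name] = issues
    return findings


fleet = [
    ClusterRecord("payments-prod", "aws", {"owner": "payments", "cost-center": "cc-101", "expires": "2030-01-01"}),
    ClusterRecord("demo-q3", "gcp", {"owner": "sales", "cost-center": "cc-205", "expires": "2024-09-30"}),
    ClusterRecord("scratch-42", "azure", {}),
]
for name, issues in audit(fleet, today=date(2025, 1, 15)).items():
    print(name, issues)
```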

Consistent Authentication and Access Control

Consistent authentication and access control is another significant challenge in multi-cloud Kubernetes environments. Each cloud provider may have its own identity management and authentication mechanisms, which complicates managing who can access what. Inconsistent policies can create security gaps and allow unauthorized access, putting sensitive data at risk.

To address these challenges, organizations should adopt centralized identity management solutions. Single sign-on (SSO) and federation across clusters can help unify access controls. By standardizing authentication procedures, businesses can enhance security, streamline user management, and reduce administrative overhead.
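
Once identities come from a central provider, one way to keep authorization consistent is to render the same Kubernetes RBAC bindings from a single group-to-role mapping and apply them to every cluster. This sketch assumes each cluster's authenticator (for example, an OIDC groups claim) presents the identity-provider group names unchanged; managed services map cloud identities differently, so verify this per provider. The group names are hypothetical; `cluster-admin`, `edit`, and `view` are Kubernetes' default user-facing ClusterRoles.

```python
import json
from typing import Dict, List

# Hypothetical mapping from identity-provider groups to the ClusterRole each should hold.
GROUP_ROLES: Dict[str, str] = {
    "platform-admins": "cluster-admin",
    "developers": "edit",
    "auditors": "view",
}


def cluster_role_bindings(group_roles: Dict[str, str]) -> List[dict]:
    """Render one ClusterRoleBinding per group so every cluster gets identical RBAC."""
    bindings = []
    for group, role in sorted(group_roles.items()):
        bindings.append({
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "ClusterRoleBinding",
            "metadata": {"name": f"idp-{group}-{role}"},
            "subjects": [{"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}],
            "roleRef": {"kind": "ClusterRole", "name": role, "apiGroup": "rbac.authorization.k8s.io"},
        })
    return bindings


# The same List manifest would be applied to each cluster, e.g. piped to `kubectl apply -f -`.
manifest = {"apiVersion": "v1", "kind": "List", "items": cluster_role_bindings(GROUP_ROLES)}
print(json.dumps(manifest, indent=2))
```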

Lack of Centralized Control and Monitoring

A significant challenge in managing multi-cloud Kubernetes environments is the lack of centralized control and monitoring. With clusters spread over numerous environments, gaining a holistic view of operations becomes difficult. This fragmentation can lead to inefficient resource usage and delayed responses to anomalies or security threats.

Addressing this requires a monitoring solution that aggregates data from all clusters into a single interface. Tools like Anodot can visualize metrics across clouds, enabling proactive management. Centralized monitoring speeds up fault resolution and makes it easier to spot underused or overloaded resources.
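
The core of a single view is tagging every sample with its cluster and provider, then aggregating. In practice, a monitoring backend does this (for example, a Prometheus-compatible system with a cluster label). The sketch below only illustrates the aggregation step on hypothetical CPU figures.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical per-cluster samples: (cluster, provider, cpu_used_cores, cpu_capacity_cores).
Sample = Tuple[str, str, float, float]


def fleet_utilization(samples: List[Sample]) -> Dict[str, float]:
    """Aggregate CPU usage into per-provider and fleet-wide utilization ratios."""
    used: Dict[str, float] = defaultdict(float)
    capacity: Dict[str, float] = defaultdict(float)
    for _cluster, provider, cpu_used, cpu_cap in samples:
        # Each sample counts toward its provider's total and the whole fleet.
        for key in (provider, "fleet"):
            used[key] += cpu_used
            capacity[key] += cpu_cap
    return {key: round(used[key] / capacity[key], 3) for key in capacity}


samples = [
    ("prod-eu", "aws", 120.0, 200.0),
    ("prod-us", "gcp", 45.0, 150.0),
    ("batch", "gcp", 90.0, 100.0),
    ("edge", "azure", 5.0, 50.0),
]
print(fleet_utilization(samples))
```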

Best Practices for Implementing Multi-Cloud Kubernetes

1. Design Loosely Coupled Applications

Loosely coupled applications can function independently across different environments, which improves flexibility and scalability. Because components are decoupled, teams can update or change one part without affecting the entire application, reducing downtime and operational risk.

This approach also makes it easier to adapt to different cloud providers' services and APIs. With a microservices architecture, businesses can optimize each service independently, improving performance and resiliency.

2. Use Cloud-Agnostic APIs

Cloud-agnostic APIs support multi-cloud Kubernetes strategies by keeping applications portable across cloud environments and reducing dependency on any one provider's services. When developers code against an abstraction layer, they can integrate with and migrate between clouds more easily.

Cloud-agnostic APIs also streamline development by relying on standardized protocols, which encourages consistent behavior across platforms. This abstraction simplifies deploying and managing applications in a multi-cloud environment.
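
A minimal sketch of coding against an abstraction layer: the application depends only on a small, provider-neutral interface, and each cloud gets its own adapter. `ObjectStore`, `InMemoryStore`, and `archive_report` are hypothetical names. The in-memory backend stands in for real adapters, which would wrap each provider's storage SDK behind the same two methods.

```python
from typing import Dict, Protocol


class ObjectStore(Protocol):
    """Provider-neutral interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; a cloud adapter would implement the same methods."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, report_id: str, body: str) -> str:
    """Business logic depends only on ObjectStore, not on any cloud SDK."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


store = InMemoryStore()
key = archive_report(store, "2025-q1", "revenue: ok")
print(key, store.get(key).decode("utf-8"))
```

Moving to another provider then means writing one new adapter, while `archive_report` and its callers stay unchanged.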

3. Use Infrastructure as Code

Implementing infrastructure as code (IaC) can help manage multi-cloud Kubernetes environments. IaC automates the provisioning and configuration of infrastructure, reducing human error and increasing consistency. By codifying infrastructure, businesses can quickly replicate cloud environments, enhancing scalability and streamlining operations.

Organizations can version control their infrastructure using tools like Terraform and Kubernetes YAML manifests, making deployments reproducible and auditable. IaC allows for precise, repeatable deployments in multi-cloud strategies where consistency and speed are vital.
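
Dedicated tools such as Terraform are the usual choice here. The Python below is only a sketch of the underlying idea: a single versioned base definition plus small, explicit per-cloud overrides, rendered into Kubernetes manifests. The app name, image, and replica counts are hypothetical.

```python
import copy
import json
from typing import Dict

# One base Deployment shared by all clouds (name and image are hypothetical).
BASE_DEPLOYMENT = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "registry.example.com/web:1.4.2"}]},
        },
    },
}

# Per-cloud overrides of top-level spec fields, kept small so differences are easy to review.
OVERRIDES: Dict[str, dict] = {
    "aws": {"replicas": 4},
    "gcp": {"replicas": 2},
    "azure": {"replicas": 3},
}


def render(cloud: str) -> dict:
    """Return a Deployment for one cloud: a copy of the base with that cloud's overrides."""
    manifest = copy.deepcopy(BASE_DEPLOYMENT)  # never mutate the shared base
    manifest["metadata"]["labels"]["cloud"] = cloud
    manifest["spec"].update(OVERRIDES[cloud])  # shallow merge into spec
    return manifest


for cloud in OVERRIDES:
    # kubectl accepts JSON as well as YAML, so this output could be saved and applied per cluster.
    print(f"--- {cloud} ---")
    print(json.dumps(render(cloud), indent=2))
```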

4. Implement Cross-Cluster Networking Management

Effective cross-cluster networking management is a necessity in multi-cloud Kubernetes setups. Stable, efficient communication between clusters in different clouds helps mitigate latency issues and ensures reliable data transfer. Networking tools and services that support multi-cloud architectures are vital for optimizing interactions between clusters.

VPNs and cloud provider networking services can enhance cross-cluster networking by providing secure, efficient connectivity between clusters, which keeps workflows running without interruption.
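
A practical prerequisite for routed connectivity between clusters (for example, over a VPN) is that their address ranges do not overlap; some overlay tools can work around overlaps with NAT, but planning non-overlapping ranges is simpler. The sketch below checks hypothetical pod CIDRs using Python's standard `ipaddress` module.

```python
from ipaddress import ip_network
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical pod CIDRs per cluster.
POD_CIDRS: Dict[str, str] = {
    "aws-prod": "10.0.0.0/16",
    "gcp-prod": "10.1.0.0/16",
    "azure-prod": "10.0.128.0/17",  # overlaps with aws-prod
}


def overlapping_pairs(cidrs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of clusters whose address ranges overlap."""
    nets = {name: ip_network(cidr) for name, cidr in cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


print(overlapping_pairs(POD_CIDRS))  # [('aws-prod', 'azure-prod')]
```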

5. Implement Centralized Logging and Distributed Tracing

Centralized logging and distributed tracing are fundamental practices in multi-cloud Kubernetes environments. Centralized logging consolidates logs from multiple clusters, supporting comprehensive monitoring and faster troubleshooting. Distributed tracing follows requests as they pass between services, helping teams diagnose performance bottlenecks and understand system dependencies.

Employing tools like OpenTelemetry and Jaeger can assist in implementing these practices effectively. Centralized logging and tracing systems provide insights into system behavior and performance, increasing operational visibility and simplifying debugging processes.
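
Tracing across clusters works because each hop forwards a trace context with the request. OpenTelemetry SDKs handle this automatically; the sketch below only illustrates what the W3C Trace Context `traceparent` header carries: a trace ID that stays constant end to end and a span ID that changes at each hop. The function names are hypothetical.

```python
import re
import secrets

# W3C Trace Context header: version-traceid(32 hex)-parentid(16 hex)-flags(2 hex).
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace, as the first service in a request path would."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming: str) -> str:
    """Keep the trace ID, mint a new span ID for the next hop, and preserve the flags."""
    match = TRACEPARENT.match(incoming)
    if match is None:
        return new_traceparent()  # malformed header: start a fresh trace
    trace_id, _parent_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


# A request entering a cluster on one cloud and calling a service on another.
edge = new_traceparent()
downstream = child_traceparent(edge)
print(edge)
print(downstream)  # same trace ID, different span ID
```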

6. Consider Implementing a Service Mesh to Enhance Observability

A service mesh provides granular visibility into service-to-service communication by moving network logic out of application code and into the mesh layer. This gives teams detailed monitoring data on how services interact and deeper insight into system operations.

With tools like Istio or Linkerd, organizations can manage traffic, enforce policies, and handle security measures seamlessly across clusters. A service mesh simplifies observing and controlling service interactions, thus improving reliability and performance.
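
One traffic-management feature meshes provide is weighted routing, for example sending a small share of requests to a cluster on another provider. Meshes such as Istio express this declaratively; the Python below is not how any mesh implements it, just a sketch of the idea, hashing each request ID so the split is proportional to the weights and stable for a given ID. Destination names and weights are hypothetical.

```python
import hashlib
from typing import Dict


def route(request_id: str, weights: Dict[str, int]) -> str:
    """Map a request to a destination in proportion to integer weights.

    Hashing the request ID instead of choosing randomly means the same ID
    always lands on the same destination.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % total
    for destination, weight in weights.items():
        if bucket < weight:
            return destination
        bucket -= weight
    raise RuntimeError("unreachable: bucket is always below the total weight")


# Hypothetical split: shift 10% of traffic to a canary cluster on another provider.
weights = {"aws-prod": 90, "gcp-canary": 10}
counts = {name: 0 for name in weights}
for i in range(10_000):
    counts[route(f"req-{i}", weights)] += 1
print(counts)  # roughly 9000 / 1000
```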

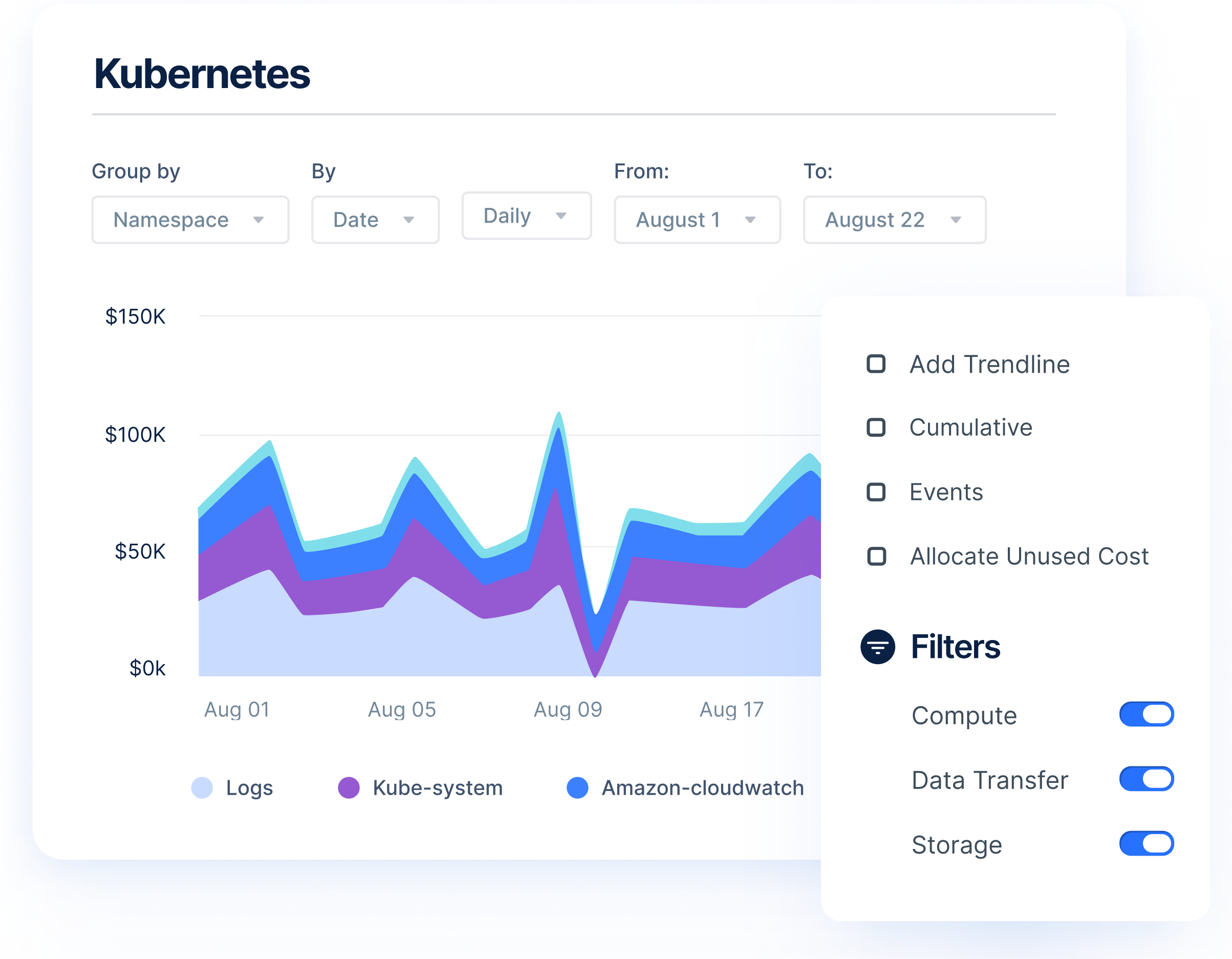

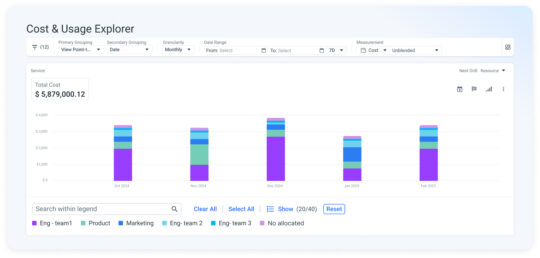

7. Use a Cost Management Platform

A cost management platform is essential for controlling and optimizing expenses in a multi-cloud Kubernetes environment. With workloads running across multiple cloud providers, cost tracking can become complicated due to differing pricing models and resource usage patterns. A dedicated cost management platform aggregates and analyzes these expenses, providing clear insights into spending across all clouds.

Tools like Umbrella help organizations identify areas of inefficiency, allowing them to make data-driven decisions to optimize resource allocation and reduce unnecessary costs. By continuously monitoring spending, businesses can implement cost-saving strategies, such as right-sizing infrastructure or using reserved instances, ensuring that they remain within budget while maximizing the value of their multi-cloud architecture.
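
Container-level cost attribution starts from a simple idea: split each node's cost across the workloads on it. The sketch below shows one common approach, allocating by share of requested CPU. It is an illustration only, not how Umbrella or any specific platform computes costs, and it ignores memory, idle capacity, and discounts. Node prices and pod requests are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical hourly node prices (USD) on two clouds.
NODE_HOURLY_USD: Dict[str, float] = {"aws-node-1": 0.40, "gcp-node-1": 0.30}

# Hypothetical pods: (node, namespace, cpu_request_cores).
PODS: List[Tuple[str, str, float]] = [
    ("aws-node-1", "checkout", 2.0),
    ("aws-node-1", "search", 2.0),
    ("gcp-node-1", "search", 1.0),
    ("gcp-node-1", "batch", 3.0),
]


def allocate(node_prices: Dict[str, float], pods: List[Tuple[str, str, float]]) -> Dict[str, float]:
    """Split each node's hourly cost across namespaces by share of requested CPU."""
    requested: Dict[str, float] = defaultdict(float)
    for node, _ns, cpu in pods:
        requested[node] += cpu
    cost: Dict[str, float] = defaultdict(float)
    for node, ns, cpu in pods:
        cost[ns] += node_prices[node] * cpu / requested[node]
    return {ns: round(usd, 4) for ns, usd in cost.items()}


print(allocate(NODE_HOURLY_USD, PODS))  # {'checkout': 0.2, 'search': 0.275, 'batch': 0.225}
```

Because `search` runs on both clouds, its cost combines a share of each node, which is the kind of cross-cloud view a cost management platform aggregates.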

Multi-Cloud Kubernetes Cost Management with Umbrella

Umbrella is a cloud cost management platform that provides deep visibility and cost optimization for Kubernetes workloads across multiple clouds:

- Combines Kubernetes cost visibility, allocation, and optimization into a single platform.

- Provides granular insights down to container-level detail, enabling precise cost attribution and control.

- Leverages AI/ML-driven recommendations and forecasting for proactive cost management.

- Empowers FinOps and engineering teams with shared data and actionable insights to align decisions with business goals.

- Supports multi-stakeholder collaboration to drive scalable improvements across Kubernetes and multi-cloud infrastructures.