What Is AWS Optimization?

AWS optimization involves using Amazon Web Services more effectively to achieve improved performance, lower cost, and better security. On AWS, the cloud cost optimization process includes analyzing the current infrastructure, identifying areas for improvement, and implementing changes.

AWS optimization is not a one-time fix, so best practices should be applied continuously. Regularly assessing and adjusting AWS resources keeps them aligned with business goals. This involves using AWS native tools, and optionally a third-party cloud optimization service, to monitor and modify resource allocation.

This is part of a series of articles about AWS cost.

Key Areas of AWS Optimization

Cost Optimization

Cost optimization focuses on reducing AWS expenses without sacrificing performance or functionality. Techniques include selecting cost-effective services, taking advantage of pricing options like Reserved Instances and Spot Instances, and employing cost-monitoring tools. Organizations can save substantially by optimizing resources and eliminating waste while maintaining needed capabilities.

Cost optimization also involves assessing current usage and scaling resources to match demand. Implementing practices like instance right-sizing and auto-scaling helps avoid over-provisioning. By regularly analyzing and adjusting resource allocation, funds can be spent more wisely, improving overall operational efficiency and budget adherence.

Performance Optimization

Performance optimization aims to enhance the efficiency and speed of AWS resources. It includes selecting appropriate instance types, optimizing resource configurations, and leveraging performance-enhancing AWS services. By improving system responsiveness and reducing latency, organizations can deliver superior user experiences.

Performance optimization requires continuous monitoring and analysis of application and infrastructure performance. Load balancing and caching can further improve system capacity and reliability.

Storage Optimization

Storage optimization focuses on efficient management of AWS storage resources. This involves selecting optimal storage types, managing lifecycle policies, and using Amazon S3 Intelligent-Tiering. By utilizing the most suitable storage solutions, organizations can reduce costs and improve data accessibility.

Proactive approaches such as data compression and eliminating redundant storage further improve efficiency. Additionally, regular audits of stored data allow businesses to identify unused or obsolete items and keep storage utilization in check.

Security Optimization

Security optimization ensures AWS environments are protected against threats. This involves configuring AWS security services such as identity and access management (IAM) and Security Hub, and regularly reviewing security settings. Prioritizing security optimization helps prevent data breaches and supports compliance with regulatory standards.

Security optimization also includes implementing network security measures, automating security checks, conducting regular vulnerability assessments, and employing encryption to enhance protection against unauthorized access.

Related content: Read our guide to AWS cost optimization

AWS Optimization Tools and Services

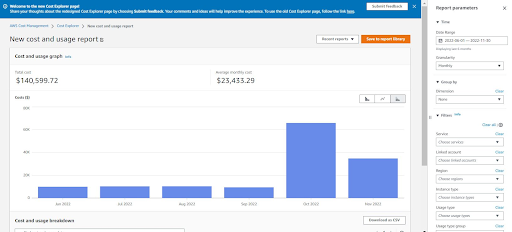

1. AWS Cost Explorer

AWS Cost Explorer is a tool to help organizations analyze, visualize, and manage their AWS expenses. It provides detailed insights into cost and usage patterns, enabling users to identify trends, uncover cost drivers, and make informed decisions. The tool supports filtering and grouping by service, account, or region, making it easier to drill down into specific areas of spending. Its predictive capabilities allow users to forecast future costs based on historical data.

Key features include:

- Interactive dashboards for tracking cost and usage trends.

- Granular filtering by service, region, or tags to pinpoint cost drivers.

- Cost and usage forecasting to estimate future expenses.

- Support for Reserved Instance (RI) and Savings Plans analysis.

Source: Amazon
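The grouping idea described above can be sketched in a few lines of Python. This is a minimal illustration, assuming cost data has already been exported into simple records; the `RECORDS` list, its field names, and the `top_cost_drivers` helper are hypothetical and do not reflect the Cost Explorer API response format.

```python
from collections import defaultdict

# Hypothetical cost records; the field names are illustrative only and
# are not the Cost Explorer API response format.
RECORDS = [
    {"service": "Amazon EC2", "region": "us-east-1", "cost": 412.50},
    {"service": "Amazon S3", "region": "us-east-1", "cost": 88.25},
    {"service": "Amazon EC2", "region": "eu-west-1", "cost": 230.00},
    {"service": "Amazon RDS", "region": "us-east-1", "cost": 150.75},
]


def top_cost_drivers(records, group_by, limit=3):
    """Sum cost per value of `group_by` and return the largest groups first."""
    totals = defaultdict(float)
    for record in records:
        totals[record[group_by]] += record["cost"]
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:limit]


if __name__ == "__main__":
    for name, total in top_cost_drivers(RECORDS, "service"):
        print(f"{name}: ${total:,.2f}")
```

Passing `"region"` instead of `"service"` ranks the same data by region, which mirrors how a drill-down narrows from the overall bill to a specific area of spending.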

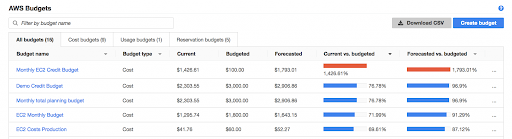

2. AWS Budgets

AWS Budgets is a financial management tool that enables organizations to define and track custom cost, usage, and savings thresholds. With configurable alerts, users can respond before a budget is overrun. The tool integrates with AWS Cost Explorer, providing additional context for budget deviations and enabling more precise financial planning. AWS Budgets supports several budget types, and budgets can be scoped by dimensions such as tags for a project or team.

Key features include:

- Custom budget thresholds based on cost, usage, or RI/Savings Plans.

- Alerts via email or Amazon SNS when budgets are exceeded or nearing limits.

- Integration with AWS Cost Explorer for detailed budget tracking.

- Support for multiple budget types, including cost, usage, and RI utilization.

Source: Amazon
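The threshold logic behind budget alerts can be sketched as follows. This is a simplified model, not the AWS Budgets implementation: the function names, the default thresholds, and the naive run-rate projection are all assumptions made for illustration.

```python
def crossed_thresholds(budget_limit, spend, thresholds_pct=(50, 80, 100)):
    """Return the percentage thresholds that `spend` has reached."""
    if budget_limit <= 0:
        raise ValueError("budget_limit must be positive")
    used_pct = spend / budget_limit * 100
    return [t for t in thresholds_pct if used_pct >= t]


def projected_month_end(spend_to_date, day_of_month, days_in_month):
    """Naive run-rate projection: assume the daily average so far continues."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    return spend_to_date / day_of_month * days_in_month


if __name__ == "__main__":
    limit = 1000.0  # hypothetical monthly budget in USD
    projected = projected_month_end(600.0, day_of_month=15, days_in_month=30)
    print("Actual thresholds reached:", crossed_thresholds(limit, 600.0))
    print("Forecast thresholds reached:", crossed_thresholds(limit, projected))
```

Checking both actual and projected spend is what lets a team react while a budget is still nearing its limit rather than after it has been exceeded.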

3. AWS Trusted Advisor

AWS Trusted Advisor acts as a virtual consultant, offering personalized recommendations to optimize AWS environments. It evaluates resource configurations against AWS best practices, spanning cost optimization, performance enhancement, security, and fault tolerance. Trusted Advisor identifies underutilized resources, security risks, and configuration missteps, providing actionable guidance to address these issues.

Key features include:

- Checks for cost-saving opportunities, such as underutilized resources.

- Security checks for misconfigurations, such as overly permissive IAM roles.

- Performance recommendations to improve system responsiveness.

- Automated fault tolerance and service limit checks.

Source: Amazon

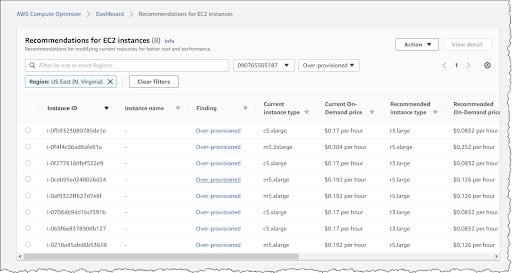

4. AWS Compute Optimizer

AWS Compute Optimizer leverages machine learning to analyze the performance of AWS compute resources and recommend optimal configurations. It helps identify instances that are underutilized or over-provisioned, providing actionable insights to improve cost efficiency and application performance. The tool evaluates metrics such as CPU, memory, and network utilization, suggesting alternative instance types or sizes that better match workload requirements.

Key features include:

- Recommendations for instance right-sizing based on workload performance.

- Analysis of CPU, memory, and network utilization patterns.

- Integration with Amazon EC2, AWS Lambda, and EC2 Auto Scaling groups.

- Detailed insights for workload optimization and cost savings.

Source: Amazon

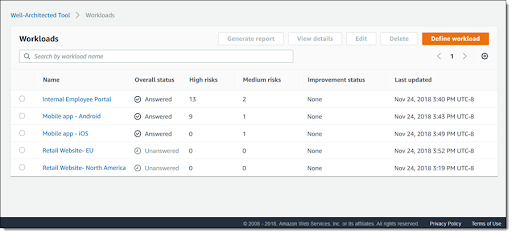

5. AWS Well-Architected Tool

The AWS Well-Architected Tool helps organizations assess and improve their cloud architecture in alignment with the AWS Well-Architected Framework. By conducting guided reviews, users can identify areas of weakness and receive prioritized recommendations for improvement. The tool covers critical pillars such as operational excellence, security, and cost optimization.

Key features include:

- Guided reviews of AWS workloads based on the Well-Architected Framework.

- Actionable recommendations for improving architecture design.

- Centralized dashboard for managing and prioritizing improvement tasks.

- Integration with service-specific best practices and insights.

Source: Amazon

6. AWS Auto Scaling

AWS Auto Scaling enables organizations to dynamically adjust AWS resources to align with fluctuating workloads. By automatically scaling up during traffic spikes and scaling down during periods of low demand, the tool helps maintain application performance while minimizing costs. It supports multiple AWS services, including EC2, ECS, and DynamoDB, providing a centralized interface for managing scaling policies.

Key features include:

- Automatic scaling of EC2 instances, ECS tasks, and DynamoDB tables.

- Predictive scaling based on machine learning models.

- Centralized interface for configuring and managing scaling policies.

- Cost-effective resource allocation to prevent over- or under-provisioning.

Source: Amazon
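To make the scale-up and scale-down behavior concrete, here is a simplified proportional model of how a target-based policy might choose capacity. It is a sketch under stated assumptions, not the algorithm AWS Auto Scaling actually runs (which also involves cooldowns, warm-up periods, and more conservative scale-in); `desired_capacity` and its parameters are hypothetical names.

```python
import math


def desired_capacity(current, metric_value, target_value, min_size, max_size):
    """Scale capacity in proportion to how far the metric is from its target.

    Simplified model: if average CPU is 75% against a 50% target, capacity
    grows by a factor of 1.5, then is clamped to the group's size limits.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    raw = math.ceil(current * metric_value / target_value)
    return max(min_size, min(max_size, raw))


if __name__ == "__main__":
    # Traffic spike: 4 instances at 75% CPU against a 50% target.
    print(desired_capacity(4, 75.0, 50.0, min_size=2, max_size=10))
    # Quiet period: 4 instances at 20% CPU against the same target.
    print(desired_capacity(4, 20.0, 50.0, min_size=2, max_size=10))
```

The minimum and maximum bounds matter as much as the target: the minimum protects availability during quiet periods, and the maximum caps spend during spikes.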

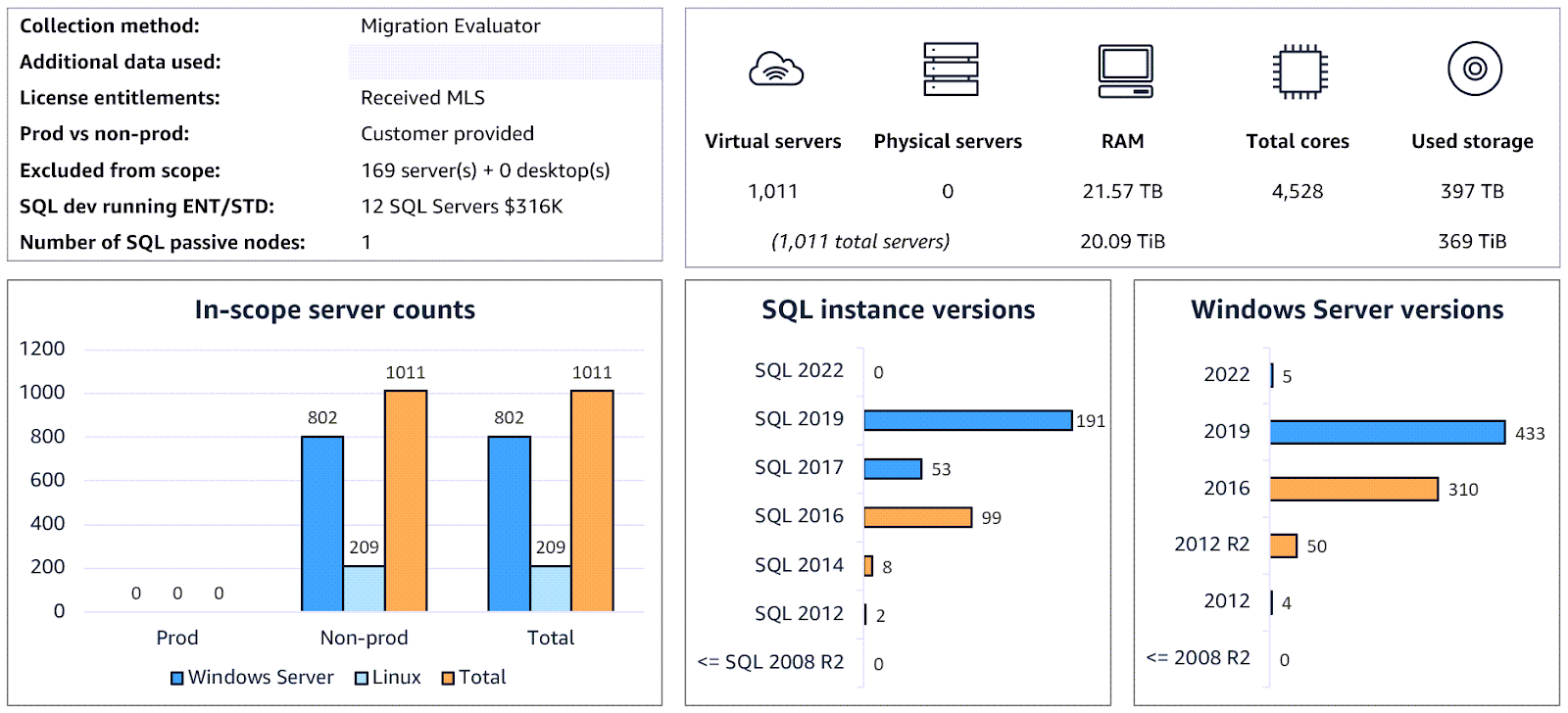

7. AWS Optimization and Licensing Assessment

AWS Optimization and Licensing Assessment (OLA) is a service designed to evaluate on-premises workloads and identify cost-saving opportunities during migration to AWS. By analyzing current resource usage and licensing requirements, OLA provides tailored recommendations for optimizing resource allocation in the cloud. This tool is particularly useful for organizations running Microsoft workloads, offering strategies to optimize licensing costs and ensure compliance.

Key features include:

- In-depth workload analysis for migration planning.

- Licensing optimization for Microsoft workloads on AWS.

- Cost estimates for cloud migration scenarios.

- Recommendations for right-sizing and resource allocation.

Source: Amazon

Common Challenges of AWS Optimization

Lack of Visibility into Resource Usage

A major challenge in AWS optimization is insufficient visibility into resource utilization. Organizations may overlook inefficiencies without clear insights into usage patterns, such as idle instances or misconfigured storage. This lack of awareness can lead to unnecessary expenses and hinder performance.

To address this, businesses should implement monitoring and reporting tools like Amazon CloudWatch and AWS Cost Explorer. These tools provide detailed metrics and visualizations of resource consumption, helping to identify inefficiencies. Additionally, tagging resources with meaningful metadata enables better tracking and accountability.

Resistance to Change in Workflows

Resistance to modifying existing workflows can impede AWS optimization efforts. Teams may be reluctant to adopt new tools or adjust established processes, fearing disruptions or increased workloads.

Overcoming resistance requires clear communication of the benefits of optimization, such as cost savings and performance improvements. Engaging stakeholders early and providing training on AWS best practices can help build confidence in the changes. Gradual implementation of optimizations with measurable milestones supports smoother transitions.

Overcoming Complexity in Hybrid or Multi-Cloud Environments

Managing hybrid or multi-cloud environments adds a layer of complexity to AWS optimization. Different platforms have unique configurations, pricing models, and management tools, making it challenging to achieve consistent performance and cost-efficiency across all systems.

Organizations should adopt unified monitoring and management solutions, such as AWS Systems Manager or third-party multi-cloud tools, to simplify this complexity. Establishing standardized processes for deploying and scaling resources across platforms reduces inconsistencies.

Best Practices for AWS Optimization

1. Right-Sizing Resources

Right-sizing resources involves aligning the capacity of AWS services with actual workload requirements. This process ensures the efficient use of compute, memory, and storage resources, minimizing costs while maintaining or improving performance. For example, workloads running on oversized EC2 instances can be shifted to smaller, less expensive instances without impacting performance. Similarly, consolidating workloads onto fewer resources can optimize utilization rates.

AWS provides tools like Compute Optimizer and Trusted Advisor to identify underutilized or overprovisioned resources and recommend adjustments. Organizations should periodically analyze historical usage data to identify patterns and fine-tune resource allocations.
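As a rough illustration of analyzing historical usage, the sketch below classifies an instance from a list of CPU utilization samples. The 40% and 80% thresholds, the use of the 95th percentile, and the function names are assumptions chosen for the example; Compute Optimizer uses its own models and more metrics than CPU alone.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def rightsizing_hint(cpu_samples, low=40.0, high=80.0):
    """Suggest an action based on 95th-percentile CPU utilization (percent).

    `low` and `high` are hypothetical thresholds; tune them per workload.
    """
    p95 = percentile(cpu_samples, 95)
    if p95 < low:
        return "downsize"
    if p95 > high:
        return "upsize"
    return "keep"


if __name__ == "__main__":
    # Hypothetical hourly CPU samples for one oversized instance.
    print(rightsizing_hint([5, 8, 12, 10, 7, 30, 9, 6]))
```

Using a high percentile rather than the average avoids downsizing an instance that is mostly idle but still needs headroom for regular peaks.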

2. Choose Appropriate Amazon S3 Storage Classes

Selecting the right Amazon S3 storage class is a critical step in optimizing storage costs. AWS offers multiple classes, each suited to different usage patterns: S3 Standard for frequent access, S3 Standard-IA and S3 One Zone-IA for infrequent access, and the S3 Glacier classes, including S3 Glacier Deep Archive, for long-term archival. S3 Intelligent-Tiering automatically moves objects between access tiers based on usage patterns, reducing costs for workloads with unpredictable access.

Organizations should assess data access requirements and retention policies to determine the most suitable class for their needs. For instance, storing log files or backups that are rarely accessed in Glacier can drastically lower expenses compared to keeping them in S3 Standard. Regular reviews of data lifecycle policies and access trends ensure data remains in the most appropriate and cost-effective class.
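A lifecycle policy of the kind described above might look like the following sketch. The dictionary follows the general shape of an S3 lifecycle configuration (the structure passed as `LifecycleConfiguration` to boto3's `put_bucket_lifecycle_configuration`); the `logs/` prefix, the day counts, and the `build_lifecycle_configuration` helper are hypothetical, and the call that would apply it to a bucket is deliberately left out.

```python
import json


def build_lifecycle_configuration(prefix, ia_after_days, glacier_after_days,
                                  expire_after_days):
    """Build a rule that tiers objects down over time and then expires them."""
    # S3 requires objects to be at least 30 days old before they move to
    # STANDARD_IA, and the stages must happen in increasing order.
    if ia_after_days < 30:
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("day counts must be strictly increasing")
    return {
        "Rules": [
            {
                "ID": f"tier-and-expire-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


if __name__ == "__main__":
    config = build_lifecycle_configuration("logs/", 30, 90, 365)
    print(json.dumps(config, indent=2))
```

Keeping the policy as data makes it easy to review alongside retention requirements before it is applied to any bucket.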

3. Use Reserved and Spot Instances

Utilizing Reserved Instances (RIs) and Spot Instances effectively can result in substantial cost savings for compute resources. RIs offer significant discounts (up to 72% compared with On-Demand pricing) in exchange for a commitment of one or three years, making them ideal for stable, predictable workloads. By contrast, Spot Instances provide up to 90% savings but are designed for flexible, fault-tolerant applications due to the risk of interruptions when AWS reclaims the capacity.

Combining RIs and Spot Instances with On-Demand Instances can optimize both costs and operational flexibility. For example, a steady base workload can use RIs, while fluctuating demands can be handled by Spot Instances. AWS Cost Explorer and EC2 Spot Fleet help identify and manage these opportunities.
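The arithmetic behind a mixed purchasing strategy can be worked through with a small sketch. Every number here is hypothetical: the $0.10 hourly rate, the 40% RI discount, and the 70% Spot discount are placeholders rather than published prices, and real Spot prices vary over time.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly billing estimates


def all_on_demand_cost(rate, baseline_instances, burst_instance_hours):
    """Monthly cost if every instance hour is billed at the On-Demand rate."""
    return (baseline_instances * HOURS_PER_MONTH + burst_instance_hours) * rate


def mixed_cost(rate, baseline_instances, burst_instance_hours,
               ri_discount, spot_discount):
    """Monthly cost with the steady baseline on RIs and bursts on Spot."""
    ri_cost = baseline_instances * HOURS_PER_MONTH * rate * (1 - ri_discount)
    spot_cost = burst_instance_hours * rate * (1 - spot_discount)
    return ri_cost + spot_cost


if __name__ == "__main__":
    rate = 0.10  # hypothetical On-Demand price per instance hour, in USD
    on_demand = all_on_demand_cost(rate, 10, 2000)
    mixed = mixed_cost(rate, 10, 2000, ri_discount=0.40, spot_discount=0.70)
    print(f"On-Demand only: ${on_demand:,.2f}")
    print(f"RI + Spot mix:  ${mixed:,.2f}")
    print(f"Savings:        {(1 - mixed / on_demand):.1%}")
```

With these placeholder inputs the mix comes to roughly $498 a month against $930 for On-Demand alone. The result is only as good as the baseline estimate, since RI capacity that sits unused is still paid for.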

4. Monitor and Analyze Resource Usage

Monitoring and analyzing AWS resource usage is key to identifying inefficiencies and ensuring optimal operation. Tools such as Amazon CloudWatch, AWS Cost Explorer, and Trusted Advisor provide actionable insights into resource utilization, application performance, and spending patterns. These insights can uncover issues like idle resources, underperforming applications, or unexpected cost spikes, enabling timely corrective actions.

Regularly analyzing trends helps organizations anticipate future needs and adjust scaling policies accordingly. For example, businesses can avoid over-provisioning by configuring auto-scaling groups based on historical demand patterns. Establishing dashboards and alerting mechanisms makes it possible to notify teams of anomalies in real time.
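One simple way to detect an unexpected cost spike is to compare each day against a trailing average. The sketch below assumes a plain list of daily cost totals; the seven-day window, the 1.5x factor, and the `find_cost_spikes` name are illustrative choices, not how any AWS anomaly detection feature works.

```python
from statistics import mean


def find_cost_spikes(daily_costs, window=7, factor=1.5):
    """Return indexes of days costing more than `factor` times the mean
    of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_costs)):
        baseline = mean(daily_costs[i - window:i])
        if baseline > 0 and daily_costs[i] > factor * baseline:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Hypothetical daily totals in USD; the ninth day (index 8) jumps.
    costs = [100, 100, 100, 100, 100, 100, 100, 105, 180, 110]
    print(find_cost_spikes(costs))
```

A trailing window adapts to gradual growth, so only abrupt changes trigger a notification.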

5. Implement Tagging Strategies

Tagging strategies provide a structured way to organize and manage AWS resources by assigning key-value pairs as metadata. For instance, tags like Owner: TeamA, Environment: Production, or CostCenter: Finance can clarify resource ownership, usage purpose, and financial accountability. This organization simplifies monitoring, reporting, and cost allocation while enabling automation of tasks like cleanup scripts for unused resources.

To maximize the effectiveness of tagging, organizations should implement a standardized tagging policy enforced across all accounts and regions. AWS tools like Tag Editor and AWS Config can assist in managing and auditing tags to ensure compliance. By using consistent tags, businesses gain better visibility into their cloud environment, streamline operations, and enhance accountability.
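A tagging policy is easier to enforce when it can be checked automatically. The following sketch audits a set of resources against the example tag keys mentioned above; the resource IDs and the in-memory `resources` mapping are hypothetical stand-ins for whatever inventory source is actually used.

```python
REQUIRED_TAGS = ("Owner", "Environment", "CostCenter")


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return required tag keys that are absent or have an empty value."""
    return [key for key in required if not resource_tags.get(key, "").strip()]


def audit(resources, required=REQUIRED_TAGS):
    """Map resource ID to its missing tag keys, for non-compliant resources."""
    report = {}
    for resource_id, tags in resources.items():
        missing = missing_tags(tags, required)
        if missing:
            report[resource_id] = missing
    return report


if __name__ == "__main__":
    # Hypothetical inventory: resource ID -> tag dictionary.
    resources = {
        "i-0aaa": {"Owner": "TeamA", "Environment": "Production",
                   "CostCenter": "Finance"},
        "i-0bbb": {"Owner": "TeamB", "Environment": ""},
        "vol-0ccc": {},
    }
    for resource_id, missing in audit(resources).items():
        print(f"{resource_id}: missing {', '.join(missing)}")
```

Treating an empty value the same as a missing key catches resources that were tagged only to satisfy a form.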

6. Automate Infrastructure Management

Automation of infrastructure management streamlines operations, reduces manual intervention, and enforces consistency across AWS environments. Tools like AWS CloudFormation, AWS Lambda, and AWS Auto Scaling enable repetitive tasks such as provisioning, scaling, patching, and monitoring to be automated. Automation reduces human error, accelerates deployment processes, and allows teams to focus on strategic initiatives.

For example, using infrastructure as code (IaC) with CloudFormation templates allows businesses to deploy entire architectures reproducibly and quickly. Auto Scaling adjusts applications dynamically to meet workload demands, while AWS Lambda can automate routine maintenance tasks, such as backups or log cleanup. By integrating automation into their workflows, organizations can achieve higher efficiency, better resource utilization, and faster response times, all while maintaining cost-effectiveness.
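The selection step of a routine cleanup task, such as one a scheduled function might run, can be sketched as follows. Only the retention logic is shown: the item records and the `expired_items` helper are hypothetical, and the actual deletion call is intentionally omitted so the logic can be reviewed and tested on its own.

```python
from datetime import datetime, timedelta, timezone


def expired_items(items, retention_days, now=None):
    """Return IDs of items created before the retention cutoff.

    Each item is a dict with an `id` and a timezone-aware `created` datetime.
    """
    if retention_days < 0:
        raise ValueError("retention_days must not be negative")
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [item["id"] for item in items if item["created"] < cutoff]


if __name__ == "__main__":
    now = datetime(2024, 6, 30, tzinfo=timezone.utc)
    # Hypothetical backups with their creation timestamps.
    backups = [
        {"id": "backup-old", "created": datetime(2024, 3, 1, tzinfo=timezone.utc)},
        {"id": "backup-new", "created": datetime(2024, 6, 20, tzinfo=timezone.utc)},
    ]
    print(expired_items(backups, retention_days=30, now=now))
```

Separating "what should be deleted" from "delete it" also allows a dry run that only reports candidates before automation is trusted with removal.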

7. Leverage Third-Party Cost Optimization Tools

Third-party cost optimization tools enhance functionality beyond AWS native tools, offering deeper insights, cross-platform capabilities, and advanced automation features. Unlike AWS tools, which focus on the AWS ecosystem, third-party solutions often support multi-cloud environments, enabling organizations to optimize costs across AWS, Azure, Google Cloud, and more. This is especially beneficial for businesses operating in hybrid or multi-cloud setups, where a unified view of expenses and resource usage is crucial.

These tools also bring advanced analytics, such as machine learning-driven recommendations, anomaly detection, and predictive cost modeling, which can go beyond the capabilities of AWS offerings. Advanced tools provide granular visibility into spending, automatically flag underutilized resources, and even perform proactive optimizations like instance right-sizing or workload migration to Spot Instances.

Taking AWS Optimization to the Next Level with Umbrella

Umbrella is a cloud optimization solution that supports AWS and other leading cloud providers to provide holistic cloud cost management:

- Integrate your cloud cost data from AWS, Azure, and GCP to build a single source of truth across public and private clouds.

- Gain complete visibility into your multi-cloud environment.

- Uncover hidden waste, optimize resource allocation, and make data-driven decisions across AWS, Azure, and GCP.

- AWS award-winning partner: Umbrella won Amazon’s 2024 EMEA Partner Awards, demonstrating its strong commitment to the AWS ecosystem.